In this article I describe a quick way to get Zeppelin running locally.

## Intro

This is a quick way to get an Apache Zeppelin notebook running in Docker so that you can quickly test a Spark application.

NOTE: This procedure shouldn’t be used in production environments. There you should set up the notebook with authentication and connect it to your own infrastructure.

## Requirements

- A working Docker environment. Check my previous {% post_link dockerclean article %} if you need some help with that.

- Docker Compose (`docker-compose`)
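If you are not sure both tools are installed, a small shell check can tell you before you start. This is a minimal sketch: `require_cmds` is a hypothetical helper, not part of Docker, and it only checks that the commands are on your `PATH`, not that the Docker daemon is actually running.

```shell
#!/bin/sh
# Hypothetical helper: report which of the given commands are missing from PATH.
# Returns 0 if all of them are found, 1 otherwise.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check the two tools this article relies on.
# require_cmds docker docker-compose && echo "ready to go"
```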

## Setup

- Create a folder named `docker-zeepelin`:

```shell
mkdir docker-zeepelin
```

- Create a `data` folder inside it, where you can put some data to analyse:

```shell
mkdir -p docker-zeepelin/data
```

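- Create the following `docker-compose.yml` file in the `docker-zeepelin` directory:

```yaml
version: '2'

services:
  zeppelin:
    ports:
      # host port 8080 -> Zeppelin web UI on port 8080 in the container
      - "8080:8080"
    volumes:
      # the local ./data folder is visible as /opt/data inside the container
      - ./data:/opt/data
    image: "dylanmei/zeppelin"
```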
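If you prefer, the folder steps above can be scripted in one go. The sketch below is an illustration only: `make_zeppelin_project` is a hypothetical helper that creates the folder, its `data` subfolder and the same `docker-compose.yml` with a shell heredoc, defaulting to `docker-zeepelin` when no folder name is given.

```shell
#!/bin/sh
# Hypothetical helper: create the project folder, its data/ subfolder
# and the docker-compose.yml used in this article.
make_zeppelin_project() {
  dir="${1:-docker-zeepelin}"
  mkdir -p "$dir/data" || return 1
  # The quoted 'EOF' stops the shell from expanding anything inside the heredoc.
  cat > "$dir/docker-compose.yml" <<'EOF'
version: '2'
services:
  zeppelin:
    ports:
      - "8080:8080"
    volumes:
      - ./data:/opt/data
    image: "dylanmei/zeppelin"
EOF
}

# Usage:
# make_zeppelin_project && cd docker-zeepelin
```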

- Launch the container with docker-compose from inside the `docker-zeepelin` directory:

```shell
sudo docker-compose up -d
```

- That’s it! You should now be able to access Zeppelin at http://localhost:8080.
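On the first run `docker-compose up -d` has to pull the image and Zeppelin needs some time to boot, so the page may not answer straight away. Below is a minimal polling sketch, assuming `curl` is installed; `wait_for_url` is a hypothetical helper, and the default of 30 attempts, 2 seconds apart, is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers with an HTTP success status.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 2).
wait_for_url() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-2}"
  i=1
  while [ "$i" -le "$tries" ]; do
    # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the page body
    if curl -sf -o /dev/null --max-time 5 "$url"; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -le "$tries" ]; then
      sleep "$delay"
    fi
  done
  echo "gave up waiting for $url" >&2
  return 1
}

# Usage, right after `sudo docker-compose up -d`:
# wait_for_url http://localhost:8080
```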

## Test it

- Let’s download a demo file into our `data` directory (run this from inside `docker-zeepelin`):

```shell
curl -s https://api.opendota.com/api/publicMatches -o ./data/OpenDotaPublic.json
```

Yeah! I kinda like Dota2 so this makes sense :D
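Before loading the file in Spark it can be worth checking that the download actually produced a list of matches, not an empty file or an error message. This sketch assumes the endpoint answers with a JSON array; `looks_like_json_array` is a hypothetical helper that only inspects the first non-blank character, so it is a rough check, not a JSON validator.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if FILE exists, is non-empty and its
# first non-blank character is "[" (i.e. it looks like a JSON array).
looks_like_json_array() {
  file="$1"
  [ -s "$file" ] || { echo "empty or missing: $file" >&2; return 1; }
  # Drop all whitespace, then keep the first remaining character.
  first=$(tr -d ' \t\r\n' < "$file" | cut -c 1)
  [ "$first" = "[" ] || { echo "not a JSON array: $file" >&2; return 1; }
}

# Usage, from inside docker-zeepelin:
# looks_like_json_array ./data/OpenDotaPublic.json && echo "looks fine"
```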

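- Create a new notebook in the web interface and run the following code:

```scala
%spark
// /opt/data is the container path of the ./data folder mounted in docker-compose.yml
val df = sqlContext.read.json("file:///opt/data/OpenDotaPublic.json")
df.show
```

Press Shift+Enter to run the paragraph.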

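- Let’s register this DataFrame as a temporary table so we can query it with SQL and create some visualizations:

```scala
%spark
// make the DataFrame queryable from %sql paragraphs under the name "publicmatches"
df.registerTempTable("publicmatches")
```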

- Create the following to generate visualizations

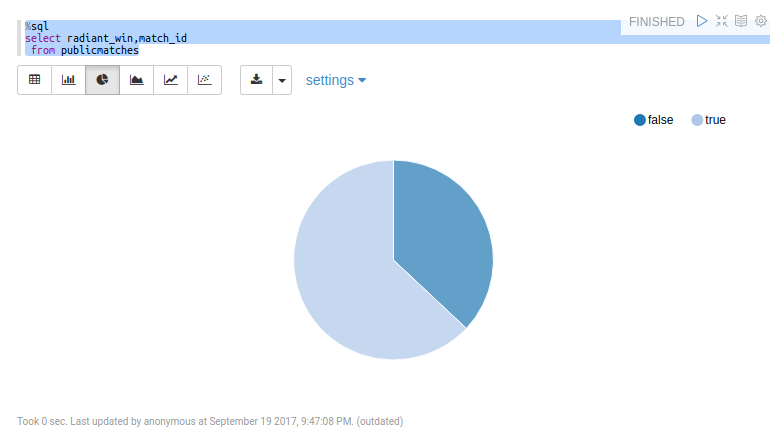

%sql

select radiant_win,match_id

from publicmatches

Guess I need to start playing on the Radiant side :D
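As a quick cross-check of the chart, you can count the winners straight from the downloaded file. This sketch assumes each match in the response carries a `"radiant_win": true` or `false` field (the same column the query above uses); `count_wins` is a hypothetical helper that matches text with `awk` instead of parsing JSON, so matches where the field is missing or `null` are simply not counted.

```shell
#!/bin/sh
# Hypothetical helper: count Radiant wins (radiant_win true) and Dire wins
# (radiant_win false) by text matching; gsub() returns the number of matches.
count_wins() {
  awk '{
    r += gsub(/"radiant_win"[ \t]*:[ \t]*true/, "")
    d += gsub(/"radiant_win"[ \t]*:[ \t]*false/, "")
  } END { printf "radiant=%d dire=%d\n", r, d }' "$1"
}

# Usage, from inside docker-zeepelin:
# count_wins ./data/OpenDotaPublic.json
```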

Well, that’s it.

Cheers, RR